More Web Scraping

In the previous post in this series we covered the fundamentals of HTML and CSS, how to identify data-containing HTML elements using CSS selectors, and a very basic workflow for scraping text data from a single web page and parsing it into an R data frame.

In this post we are going to cover the process of scraping data from a sequential series of pages and continue working up the code we need to build a data set of 7 variables for every movie in the IMDb List of Top Rated Sci-Fi Movies.

Note: I am going to assume that anyone following this post from this point has been through the first post, has installed and loaded the dplyr and rvest packages in R, and has all of the code chunks from last time stored in a script. If not, go follow the first post, swipe all the code, and let’s scrape ourselves some more data.

Scraping Data from Sequential Web Pages

The key to scraping data from a series of sequential pages lies in realising that the URLs for sequential pages are usually very similar to one another. This means all we need to do is figure out how they change so that we can iteratively alter the dynamic components inside our script, visit the URL, perform scraping tasks, store the data, rinse and repeat. We are going to automate this process.

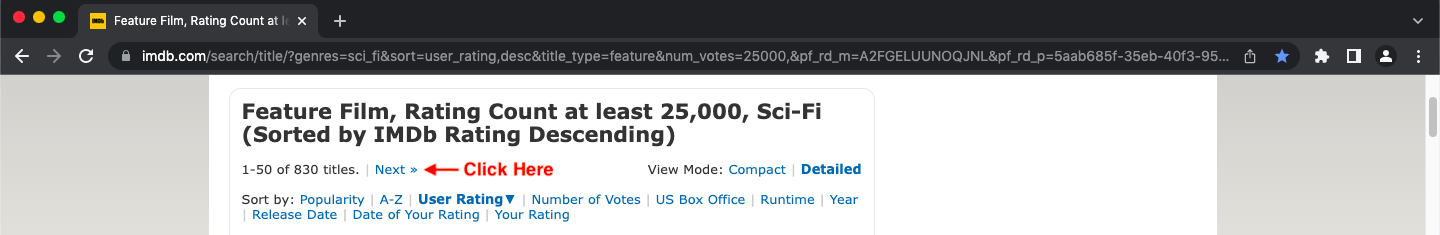

First, navigate to the IMDb link above, find the “Next »” hyperlink just below the masthead at the top of the page and give it a click:

Next, copy the URL from the address bar and paste it into a text editor, then go back to your browser, click “Next »” again and do the same thing, pasting the second URL below the last one. You should end up with this:

```
# spot the difference
https://www.imdb.com/search/title/?title_type=feature&num_votes=25000,&genres=sci-fi&sort=user_rating,desc&start=51&ref_=adv_prv
https://www.imdb.com/search/title/?title_type=feature&num_votes=25000,&genres=sci-fi&sort=user_rating,desc&start=101&ref_=adv_prv
```

Comparing the two URLs, you should notice that the only difference is the number contained after the string of characters “desc&start=” towards the end of each URL; it changes from 51 in the first to 101 in the second.
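If you would like R to spot the difference for you, here is a small sketch in base R that pulls the number after “start=” out of each copied URL and compares them. The variable names are just illustrative choices; the only thing the regular expression assumes is that “&start=” appears once in each URL, as it does here.

```r
# the two "Next »" URLs copied above
urls <- c(
  "https://www.imdb.com/search/title/?title_type=feature&num_votes=25000,&genres=sci-fi&sort=user_rating,desc&start=51&ref_=adv_prv",
  "https://www.imdb.com/search/title/?title_type=feature&num_votes=25000,&genres=sci-fi&sort=user_rating,desc&start=101&ref_=adv_prv"
)

# capture the digits that follow "&start=" and convert them to integers
start_values <- as.integer(sub(".*&start=([0-9]+).*", "\\1", urls))
start_values
# [1]  51 101

# the gap between consecutive pages is the number of entries shown per page
diff(start_values)
# [1] 50
```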

If you are keen-eyed you may have noticed that the URL of the page we visited for the first 50 movies looks different to the ones we have just examined:

https://www.imdb.com/search/title/?genres=sci_fi&sort=user_rating,desc&title_type=feature&num_votes=25000,&pf_rd_m=A2FGELUUNOQJNL&pf_rd_p=5aab685f-35eb-40f3-95f7-c53f09d542c3&pf_rd_r=9XADGPNFYQM0JP3Q5N2R&pf_rd_s=right-6&pf_rd_t=15506&pf_rd_i=top&ref_=chttp_gnr_17

No drama. Take one of the URLs we copied above and swap either the number 51 or 101 for the number 1 so you get this:

https://www.imdb.com/search/title/?title_type=feature&num_votes=25000,&genres=sci-fi&sort=user_rating,desc&start=1&ref_=adv_prv

Now copy this and paste it into your browser address bar, hit return, and you will land at the very same top-level page for the first 50 movies. Great! This common URL structure means we can iteratively swap the number in the URL to specify the top-level page we want to access and scrape.
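The same swap can be done in R rather than by hand. This minimal sketch uses base R's `sub()` to replace whatever number follows “start=” with 1; `next_url` is simply one of the URLs copied earlier.

```r
# one of the "Next »" URLs copied earlier
next_url <- "https://www.imdb.com/search/title/?title_type=feature&num_votes=25000,&genres=sci-fi&sort=user_rating,desc&start=101&ref_=adv_prv"

# replace the digits after "start=" with 1 to point at the first page of 50 movies
first_page_url <- sub("start=[0-9]+", "start=1", next_url)
first_page_url
```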

More experienced readers will know that there are multiple suitable approaches for repeatedly executing lines of code (for-loops, while-loops, and the map and apply function families in R and Python, to name just a few), along with several variations on each. The structure and behaviour of a for-loop are relatively easy for less experienced users to understand, so that’s what we are going to use here. We will see exactly how a basic for-loop works shortly.

Before we run a for-loop we first need to define a sequence over which the for-loop will iterate; these are page numbers in this case. There are 834 entries in the IMDb List of Top Rated Sci-Fi Movies at the time of writing, so the final top-level page will start at 801 because there are 50 list entries displayed per page. We therefore need to generate a sequence of 17 numbers from 1 to 801 with intervals of 50, which can be achieved in R like this:

```r
# create a sequence of top-level page numbers to use in the loop
top_level_numbers <- seq(from = 1, to = 801, by = 50)
```
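Rather than working out the final start value of 801 by hand, you can let `seq()` do the arithmetic from the entry count. This is a sketch assuming the 834 entries and 50 entries per page quoted above; `total_entries` and `entries_per_page` are names introduced here for illustration. It works because `seq()` never goes past `to`, so the last value it keeps is the largest 1 + 50k that does not exceed 834.

```r
# figures from the text: 834 list entries at the time of writing, 50 per page
total_entries <- 834
entries_per_page <- 50

# seq() stops at the last value <= total_entries, which is 801 here
top_level_numbers <- seq(from = 1, to = total_entries, by = entries_per_page)

length(top_level_numbers)
# [1] 17
tail(top_level_numbers, 1)
# [1] 801
```

If the list grows, only `total_entries` needs updating.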

Now we can construct a for-loop template. Take a look at the following basic example:

```r
for (number in top_level_numbers) {

  # tell me which entries the loop is currently working on
  cat("Working on entries beginning with entry", number, "\n")

  # alter the URL by substituting the page number
  url <- paste0("https://www.imdb.com/search/title/?title_type=feature&num_votes=25000,&genres=sci-fi&sort=user_rating,desc&start=",
                number,
                "&ref_=adv_prv")

  # show me the URL that has been created
  cat("The URL for these entries is:", url, "\n")
}
```

This for-loop works by iterating over each single value in top_level_numbers and does the following:

- Assigns the first value in `top_level_numbers` to the `number` variable
- Prints a message directly to the console telling us which `number` the loop is currently working on
- Creates the `url` variable by concatenating the `number` variable and two static character strings
- Prints a second message directly to the console showing the URL that has just been created
- Assigns the next sequential value in `top_level_numbers` to the `number` variable and repeats until the code in the loop has been executed once for every value in `top_level_numbers`

The "\n" at the end of each call to the cat() function ends each message with a newline, so successive messages are printed to the console on separate lines.
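For comparison, because `paste0()` is vectorised, all of the URLs the loop prints can also be built in a single call. This is a sketch of an alternative way to produce the URLs, not a replacement for the loop: each page still has to be read with `read_html()` one URL at a time.

```r
top_level_numbers <- seq(from = 1, to = 801, by = 50)

# paste0() recycles the two fixed strings across every value of
# top_level_numbers, returning one URL per top-level page
urls <- paste0(
  "https://www.imdb.com/search/title/?title_type=feature&num_votes=25000,&genres=sci-fi&sort=user_rating,desc&start=",
  top_level_numbers,
  "&ref_=adv_prv"
)

length(urls)
# [1] 17
```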

Now we know how a for-loop works and have some console output from which we can track the progress of our web-scraping program.

Next, we need to add another couple of lines of code before the for-loop to create an object in which the for-loop can store output data on each successive iteration:

```r
# create a sequence of top-level page numbers to use in the loop
top_level_numbers <- seq(from = 1, to = 801, by = 50)

# create a storage list with one slot per page (preallocation of memory)
store_data <- vector(mode = "list", length = length(top_level_numbers))

# name the slots by page number so each loop iteration can store its data under that name
names(store_data) <- top_level_numbers
```

The process of creating an object with the required dimensions to store all output data in this way is called “preallocation of memory”. It is not mandatory in R, but it can noticeably improve the speed of your programs when a loop runs many iterations, because R does not have to keep enlarging the object as new results arrive. I will cover this topic in more detail in a future post about iterative control flow statements and the function families that serve this purpose.
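To see why the loop below converts `number` to a character before storing anything, it helps to look at the names the preallocated list actually carries. In this small sketch the `data.frame(title = "placeholder")` is a stand-in for one page of scraped results.

```r
top_level_numbers <- seq(from = 1, to = 801, by = 50)

# preallocate an empty list with one slot per page and name the slots
store_data <- vector(mode = "list", length = length(top_level_numbers))
names(store_data) <- top_level_numbers

# the names are character strings, not numbers
names(store_data)[1:3]
# [1] "1"   "51"  "101"

# so a page's slot is addressed by name; assigning to store_data[[51]] instead
# would target position 51 and silently extend the list rather than fill "51"
store_data[[as.character(51)]] <- data.frame(title = "placeholder")
```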

Almost done. If we now add the code you should have from the first post into the body of the for-loop, along with a couple of lines that store the scraped data inside the storage object we have just created, then we have the most basic code required for scraping the data from the top-level pages. The for-loop that you have written so far should look something like this:

```r
library(rvest)  # read_html(), html_elements(), html_text() and the %>% pipe

for (number in top_level_numbers) {

  # console output for progress monitoring
  cat("Working on entries beginning with entry", number, "\n")

  # alter the URL by substituting the page number
  url <- paste0("https://www.imdb.com/search/title/?title_type=feature&num_votes=25000,&genres=sci-fi&sort=user_rating,desc&start=",
                number,
                "&ref_=adv_prv")

  # read the HTML code for the page at the specified URL
  page <- read_html(url)

  # extract movie titles
  title <- page %>%
    html_elements(".lister-item-header a") %>%
    html_text()

  # extract movie release year
  year <- page %>%
    html_elements(".text-muted.unbold") %>%
    html_text()

  # extract movie director
  director <- page %>%
    html_elements(".text-muted+ p") %>%
    html_text()

  # extract movie runtime
  runtime <- page %>%
    html_elements(".runtime") %>%
    html_text()

  # extract the gross box-office takings
  gross_boxoffice <- page %>%
    html_elements(".sort-num_votes-visible") %>%
    html_text()

  # extract IMDb rating
  imdb_rating <- page %>%
    html_elements(".ratings-imdb-rating strong") %>%
    html_text()

  # convert the number to a character string so the list entry is accessed by name
  # (list names are character, not numeric)
  entry_number <- as.character(number)

  # combine the scraped vectors into a data frame and store it in the matching list entry
  store_data[[entry_number]] <- data.frame(title, year, director, runtime, gross_boxoffice, imdb_rating)
}
```
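The loop above assumes every selector returns exactly one match per movie. If a movie on some page lacks one of these elements (a runtime, say), the vectors end up with different lengths and `data.frame()` stops with an error about differing numbers of rows. One defensive variation, sketched below, is to select one container node per movie and then use rvest's `html_element()` (singular), which returns one result per input node and gives `NA` where nothing matches. The HTML string and the `.lister-item` container class are assumptions made up for illustration, not copied from IMDb's live markup.

```r
library(rvest)

# hypothetical markup imitating two list entries; the second has no runtime
html <- '
<div class="lister-item">
  <h3 class="lister-item-header"><a href="#">Movie A</a></h3>
  <span class="runtime">148 min</span>
</div>
<div class="lister-item">
  <h3 class="lister-item-header"><a href="#">Movie B</a></h3>
</div>'

page <- read_html(html)

# one node per movie (".lister-item" is an assumed container class)
items <- html_elements(page, ".lister-item")

# html_element() returns exactly one result per item, so html_text()
# yields NA for the movie with no runtime instead of a shorter vector
movies <- data.frame(
  title   = html_text(html_element(items, ".lister-item-header a")),
  runtime = html_text(html_element(items, ".runtime"))
)
movies
```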

All that’s left to do for the time being is to construct a data frame from the data we have scraped so far and marvel, yet again, at how easy that was:

```r
library(dplyr)  # bind_rows(), glimpse()

# bind the list of stored data frames into a single data frame
imdb_data <- bind_rows(store_data)

# preview the data frame and view its dimensions
glimpse(imdb_data)

# view the data frame
View(imdb_data)
```
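Because `store_data` is a named list, `bind_rows()` can also record which top-level page each row came from through its `.id` argument, which can be handy when checking the scrape. In this sketch the two small data frames are stand-ins for scraped pages, and the column name `page_start` is an illustrative choice.

```r
library(dplyr)

# stand-in results named by page start number, mirroring store_data
store_data <- list(
  "1"  = data.frame(title = c("Movie A", "Movie B"), imdb_rating = c("8.8", "8.7")),
  "51" = data.frame(title = "Movie C", imdb_rating = "8.0")
)

# .id turns the list names into a column recording each row's source page
imdb_data <- bind_rows(store_data, .id = "page_start")
imdb_data
```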

In the next post in this series I will demonstrate how to scrape data from nested pages that are linked to each of the top-level pages we have used here. We will then bring everything together to complete the construction of our scraped data set.

As an added bonus, at the end of the next post I will include the script that I wrote to construct the same data set when learning to use the rvest package. See you then.

Thanks for reading. I hope you enjoyed the article and that it helps you to get a job done more quickly or inspires you to further your data science journey. Please do let me know if there’s anything you want me to cover in future posts.

Happy Data Analysis!

Disclaimer: All views expressed on this site are exclusively my own and do not represent the opinions of any entity whatsoever with which I have been, am now or will be affiliated.